What is generative AI?

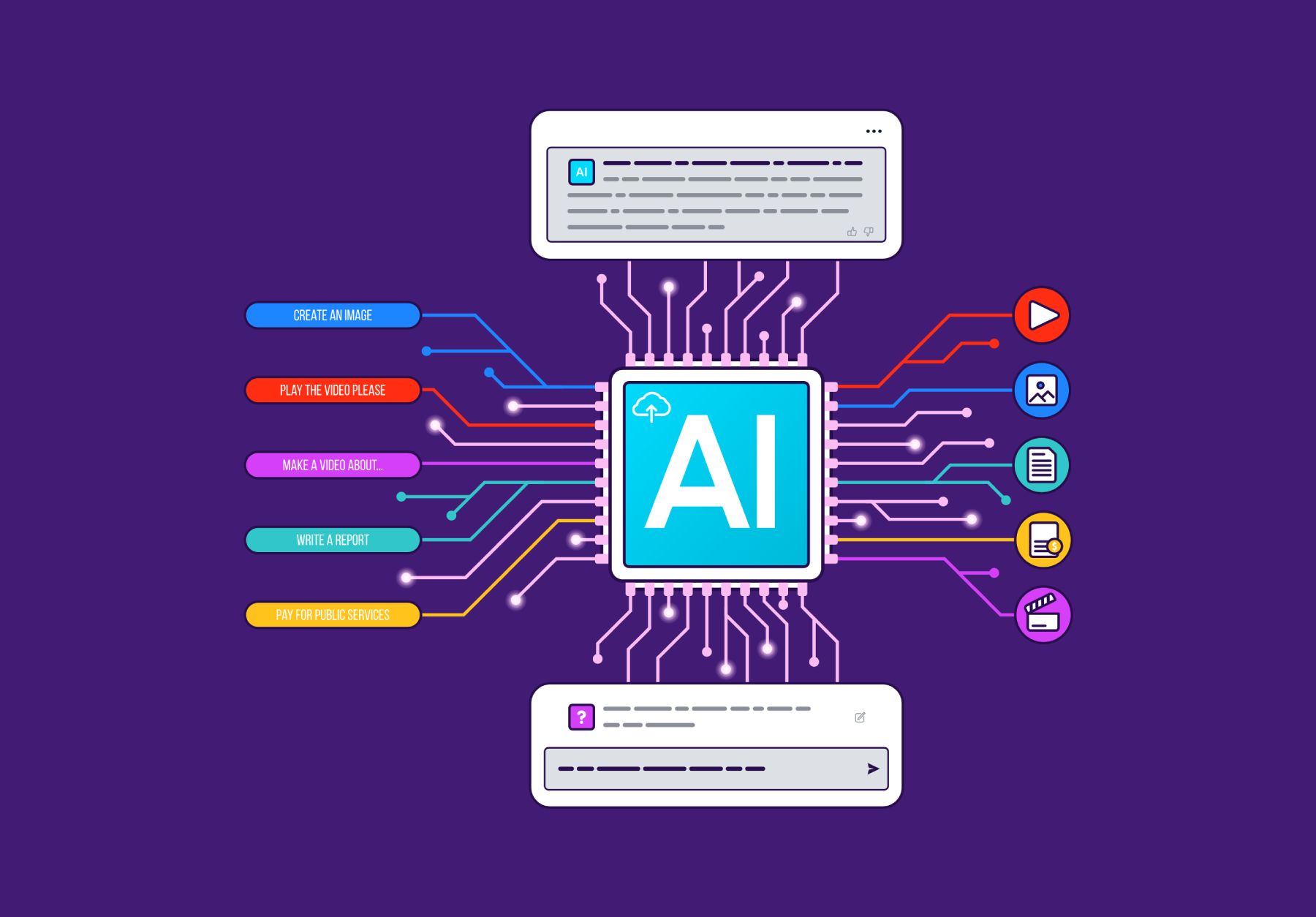

Let’s start by building a definition of generative AI. Generative AI (GenAI) refers to a category of artificial intelligence that uses advanced Machine Learning techniques, particularly Deep Learning models, to create new content by learning patterns and structures from existing data. Today, the most advanced generative AI solutions, particularly Large Language Models (LLMs) and diffusion models, are built on Deep Learning, with minimal reliance on traditional Machine Learning approaches. GenAI can generate novel content, often imitating human creativity, and its outputs are distinct from, yet similar to, its training data. This is in contrast to traditional AI systems, which are designed for specific tasks like classification or prediction.

Now that we have a definition, let’s take a closer look at how generative AI works on a more technical level. GenAI models use Deep Learning architectures to model complex patterns in data in order to produce new content.

During training, these models process huge datasets to find connections between elements such as words, colors, and shapes. These relationships are encoded into structured mathematical representations. Depending on the type of model, these representations can take a variety of forms, such as embeddings in language models or latent spaces in models for images, audio, and other high-dimensional data. Techniques like self-attention (used in transformer models) allow the model to focus on the most relevant parts of the input data, improving coherence and contextual accuracy. Other architectures, such as GANs or VAEs, use different mechanisms to achieve similar results.
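To make the idea of embeddings a little more concrete, here is a minimal Python sketch. The three-dimensional vectors and the function names are invented purely for illustration; real models learn vectors with hundreds or thousands of dimensions. The sketch only shows the general principle that related words end up close together in the vector space.

```python
import math

# Hypothetical 3-dimensional embeddings, invented for illustration only.
# Real models learn much larger vectors from data.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word):
    """Return the other vocabulary word whose vector is closest to `word`."""
    others = (w for w in EMBEDDINGS if w != word)
    return max(
        others,
        key=lambda w: cosine_similarity(EMBEDDINGS[word], EMBEDDINGS[w]),
    )


closest_to_cat = most_similar("cat")
```

With these made-up vectors, "cat" sits closer to "dog" than to "car", which is the kind of relationship a trained model would be expected to capture.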

How this works varies depending on the output. For example, in text generation, the model predicts the next word based on preceding words. This is a little different in image generation, where diffusion models create images by progressively removing noise from a random starting state, gradually forming coherent visuals.
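The text-generation step can be sketched in a few lines of Python. This is a simplified illustration, not how any particular model is implemented: the candidate words and their scores are hypothetical, and a real model would compute scores over its entire vocabulary. The sketch turns raw scores into probabilities with a softmax and then picks the most likely word.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def predict_next_word(scores):
    """Greedy decoding: pick the candidate with the highest probability."""
    probs = softmax(scores)
    return max(probs, key=probs.get)


# Hypothetical scores a model might assign after "The cat sat on the"
scores = {"mat": 3.2, "sofa": 2.1, "moon": -0.5}
next_word = predict_next_word(scores)
```

In practice, tools often sample from the probabilities instead of always taking the top word, which is one reason the same prompt can produce different answers.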

This process isn’t perfect and requires constant improvement. Deep Learning specialists employ a variety of methods to improve the outcome of the models. This might involve improving the quality of the dataset, upgrading model architecture, fine-tuning parameters, or a variety of other approaches.

Where exactly did generative AI originate? One of the mathematical foundations of artificial intelligence can be found in Markov Chains, first introduced by Russian mathematician Andrey Markov in 1906. His work demonstrated how probabilities could describe sequences of events. While early AI in the 1950s and 1960s was primarily based on symbolic reasoning and rule-based systems, probabilistic models like Markov Chains influenced later developments.
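A Markov Chain is simple enough to show in code. The following Python sketch is a toy example under stated assumptions: the corpus, the function names, and the fixed random seed are all made up for illustration. It records which words follow which in a short text, then generates a new sequence by repeatedly picking a random follower of the current word.

```python
import random
from collections import defaultdict


def build_chain(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain


def generate(chain, start, length, seed=0):
    """Walk the chain, picking each next word at random from observed followers."""
    rng = random.Random(seed)
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = chain.get(word)
        if not followers:  # dead end: no word ever followed this one
            break
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)


corpus = "the cat sat on the mat and the dog sat on the rug"
chain = build_chain(corpus)
sentence = generate(chain, start="the", length=6)
```

Each word depends only on the word immediately before it, which is why the output of such a chain quickly loses coherence compared with modern models that consider much longer context.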

Following this, a series of innovations led to breakthroughs in neural networks, starting with the Perceptron in 1958 and continuing with the resurgence of neural network research in the 1980s, driven by backpropagation. Graphics Processing Units (GPUs), introduced in 1999, later provided the parallel computing power that further accelerated AI development.

In 2014, Ian Goodfellow and his colleagues introduced Generative Adversarial Networks (GANs), which pioneered adversarial training, allowing models to generate increasingly realistic images, audio, and video. While GANs were a significant milestone, later advances in transformer and diffusion models proved to be more impactful for today’s generative AI.

In 2017, Google researchers introduced transformers, a new neural network architecture that revolutionized how AI processes sequential data. Transformers use self-attention mechanisms to analyze relationships between elements in a sequence, enabling models to understand context more effectively than previous architectures. This is achieved by converting text into tokens, which the model processes mathematically to identify patterns and meaning. The introduction of transformers paved the way for today’s generative AI, forming the foundation of Large Language Models (LLMs) like GPT-4.
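The core of self-attention can be sketched in plain Python. This is a deliberately simplified version: real transformers apply learned query, key, and value projections and use many attention heads, while this sketch uses the input vectors directly. The token vectors and function names are hypothetical.

```python
import math


def softmax(values):
    """Convert a list of scores into weights that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Each output vector is a weighted average of all input vectors, where
    the weights reflect how strongly one token's vector matches the others.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors standing in for three tokens.
tokens = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
outputs, weights = self_attention(vectors)
```

Each row of `weights` describes how much one token attends to every token in the sequence, including itself, and each row of `outputs` is the resulting context-aware representation of that token.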

The first cutting-edge generative AI models that made use of transformers and Deep Learning were released in 2020. One of the most significant breakthroughs was GPT-3, which set new standards for natural language generation. Over the next few years, further generative AI models emerged, including DALL·E (2021) for text-to-image generation, Stable Diffusion (2022) as an open-source alternative, and Google Bard (now Gemini) in 2023 for conversational AI. Since then, these models have become increasingly sophisticated, driving advancements in foundational AI systems that power a wide range of applications.

Foundational and Task-Driven Models

Generative AI models have a number of different classifications. At the highest level, there are two types of generative AI: Foundational Models and Task-Driven Models. Large, general-purpose AI systems known as Foundational Models can be used as a foundation for a wide range of tasks. This includes some of the most well-known generative AI models, such as GPT-4, which powers ChatGPT. Typically, Task-Driven Models have a smaller scope and are designed to excel in particular domains or tasks. Some are fine-tuned versions of Foundational Models, while others are built from the ground up for specialized applications.

Within these classifications, generative AI is built on different AI approaches, including the following:

Symbolic AI: Uses explicit rules, logic, and symbols to represent knowledge and perform reasoning.

Machine Learning: Enables systems to learn from data and improve performance without being explicitly programmed.

Supervised Learning: Trains models on labeled data to predict outcomes for new, unseen inputs.

Unsupervised Learning: Identifies patterns and structures in unlabeled data without predefined outcomes.

Reinforcement Learning: Teaches agents optimal behaviors through trial and error by receiving rewards or penalties.

While many of the most famous generative AI models are Foundational Models, this isn’t always the case. For example, OpenAI’s Jukebox is a Task-Driven Model designed specifically for music generation rather than general-purpose applications.

Beyond their intended purpose, the key differences between Foundational and Task-Driven Models include training data, architecture, training methodology, and scale. Typically, Foundational Models are trained on diverse datasets, using unsupervised or self-supervised learning, often followed by fine-tuning with supervised techniques. This is a computationally intensive process that can involve billions of parameters. This upfront investment in training makes a Foundational Model highly versatile, enabling it to be applied across a wide range of use cases.

Examples of Foundational Models include:

GPT-4: A large language model that generates human-like text for diverse tasks, such as writing, coding, and summarization.

DALL·E: An image-generation model that creates unique visuals from textual descriptions, covering a wide range of styles and concepts.

Stable Diffusion: An open-source model that generates high-quality images based on prompts, enabling customization and flexibility in artistic creation.

Claude 2: A conversational AI model developed by Anthropic, designed to generate human-like text and engage in dialogue.

These models are valuable because they allow generative AI to be applied across multiple domains, from text and image generation to automation and personalized content creation.

Generative AI use cases

Generative AI has a wide variety of use cases, both serious and fun. For example, if you’re stuck on what to say or want to explore new ideas, ChatGPT can serve as a brainstorming assistant, offering diverse perspectives and helping you refine your ideas. One thing to note about generative AI tools is that they do not possess human-like creativity but can generate novel outputs by recombining and transforming existing patterns in complex ways. These tools are designed to augment human creativity, not replace it.

GenAI has a broad range of applications across various industries:

Understanding user intent

A key challenge for marketers is interpreting what a user actually wants to do. As third-party tracking cookies are phased out, it is becoming harder to understand user behavior and reach the right audience. Without tracking cookies, cookieless marketing on the open web typically relies on contextual marketing. This requires processing billions of articles to identify the right ones to advertise on.

RTB House’s history of using Deep Learning models laid the foundation for our most recent advancement in GenAI called IntentGPT. This will help marketers understand what their users want without needing to rely on outdated privacy-invasive tracking methods, like third-party tracking cookies.

Generating copy

Generative AI for text can help to create high-quality content at scale. Tools like GPT-4 and Jasper AI can generate blog posts, ad copy, emails, and even social media posts. With the right prompts, generative AI can also help people in their daily lives, especially those who sometimes struggle to find the right words. There are also some GenAI tools designed to help with very specific tasks. For example, Persado analyzes emotional triggers to create persuasive ad copy.

Creating and testing visual content

Visual content is a key component of modern life. Generative AI like DALL·E, Canva Magic Design, and Stable Diffusion enables users to produce unique visuals quickly and affordably. This helps people with limited resources get their projects off the ground by allowing them to generate visuals based on simple descriptions without needing to hire an artist at the start. For example, DALL·E can generate an image of a vibrant beach scene or book cover in seconds.

In a corporate context, generative AI tools also simplify A/B testing for visual creatives. By generating variations of designs or ads, marketers can quickly test which resonates most with audiences. Tools like Runway ML allow.